Visual Interpretation for "Six Seasons" | Art & Science

The Chukchi Sea, north of Alaska, is one of the most inaccessible places to humans on earth. Six seasons in the Arctic, according to the Inuit, are not demarcated by a fixed calendar, but by what we hear in the changing environment. Hydrophones were placed about 300 meters below the sea surface at a seafloor recording location 160 km north of Utqiagvik, Alaska. They capture the sound of sea ice, marine mammals, and the underwater environment throughout an entire year. Our journey begins on October 29, 2015, just three days after new ice had started to form – the birth of ice.

By Lei Liang and Joshua Jones

"Six Seasons" is a collaborative research project directed by Lei Liang and Joshua Jones, where I (Mingyong Cheng) create a visual interpretation using generative AI and visual computing for an experimental and interdisciplinary music series in 2024. This work guides listeners through the six seasons of the Chukchi Sea, north of Alaska, where Liang and Jones's team integrate real-world recordings of sea ice, marine mammals, and underwater environments with speculative sounds crafted by performers.

Abstract

"From Speculative Soundscape to Landscape: The Visual Interpretation of 'Six Seasons'" introduces a novel approach to environmental data visualization by transforming Arctic hydrophone recordings into dynamic, culturally informed landscapes. This research addresses the challenge of representing remote ecological data—particularly for regions inaccessible to people—through an artistic lens that bridges Western scientific inquiry with Eastern aesthetic traditions.

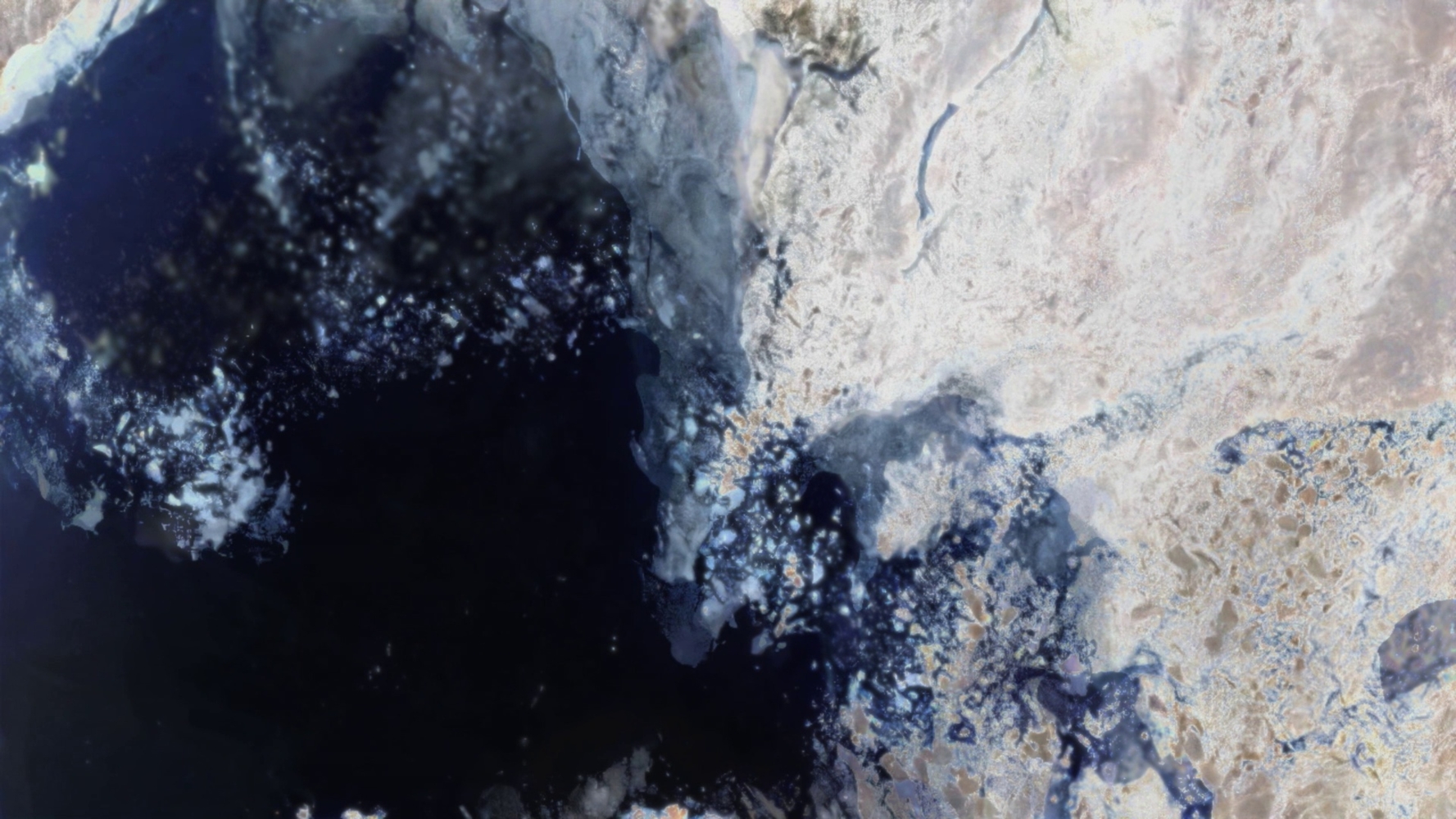

The project interprets recordings captured 300 meters below the Chukchi Sea surface, merging them with musical compositions to create audio-reactive visual experiences. The visualization methodology combines three essential components: processing NASA satellite imagery, fine-tuning generative AI models to synthesize art that blends Arctic icescapes with stylistic elements of traditional Chinese Shan-shui painting, and real-time audio-visual synthesis through StreamDiffusion and TouchDesigner. These methods translate environmental data into contemplative forms that dynamically respond to the acoustic structure of the composition.

This work advances the field of environmental storytelling by demonstrating how AI-driven visualizations can enhance scientific-artistic collaboration while preserving the integrity of both scientific data and cultural traditions. By offering new strategies for representing ecosystems in regions inaccessible to traditional observation methods, this project reveals the potential of blending computational techniques with Shan-shui-inspired aesthetics. The resulting framework transforms raw environmental data into meaningful, culturally resonant visual narratives, illustrating how technology can mediate between empirical measurement and creative interpretation.

Introduction

The Chukchi Sea, north of Alaska, is one of Earth's most inaccessible regions. The Inuit recognize six Arctic seasons defined by environmental changes, not fixed dates.[^1] Hydrophones placed 300 meters below sea level, 160 km north of Point Barrow, captured year-round recordings of sea ice, marine mammals, and the underwater soundscape.[^2]

In Six Seasons, composer Dr. Lei Liang and oceanographer Dr. Joshua Jones integrate these recordings with live performance by Marco Fusi (violin and viola d'amore). Using improvisation and specific instrumental techniques, performers create sonic gestures inspired by bowhead whale calls, sea ice formation, and beluga songs, bringing this remote underwater world into concert spaces.[^2] This artistic interpretation allows audiences to both hear and feel the powerful forces at work in this extreme environment.

In 2024, new media artist Mingyong Cheng joined Dr. Liang's team to develop an audio-visual experience. The original visual component featured high-resolution NASA satellite images showing seasonal changes across six Arctic sites, documenting ice fracturing, ocean currents, and coastal erosion. While scientifically accurate, these fixed perspectives limited the artistic interpretation Dr. Liang envisioned. He noted: "These satellite views mirror Shan-shui-hua—not just landscapes, but nature's own brushwork."

This observation inspired Cheng to incorporate "Shan-shui" principles, but it raised a challenge: how could the team apply these principles to a place none of them had physically visited? Traditional Chinese landscape painting draws from artists' lived experiences and memories of mountains and rivers, yet the Arctic existed for the team only as data: hydrophone recordings, satellite images, and scientific interpretations.

Cheng recognized parallels with her earlier project Fusion: Landscape and Beyond,[^4] where she used generative AI to synthesize “cultural memory” by fine-tuning models on Shan-shui paintings to explore a speculative vision of the past, present, and future. Could generative AI similarly reimagine environmental data as speculative Shan-shui, creating imagined landscapes of this remote place? How might these speculative landscapes preserve Arctic features while embracing Shan-shui aesthetics? Additionally, how could visual and audio elements interact to represent dynamic ecosystem changes across seasons?

Methodology

To answer the proposed research questions, the team designed three key features for this visual experience:

- Transcultural Visual Synthesis: Blending Arctic aesthetics with Shan-shui painting motifs, creating moments where each appears distinctly and instances where they merge.

- Speculative Landscape Creation: Deliberately layering synthetic (AI-generated) and authentic imagery. This approach mirrors Dr. Liang's composition methodology and conceptually echoes layered brushstroke techniques explored by Chinese landscape painter Huang Binhong and calligrapher Wang Dongling. The six videos function as "Shan-shui" interpretations of the Arctic.

- Dynamic Visual Transformation: Implementing audio-visual reactive design inspired by the "shifting perspectives" technique in Chinese landscape painting, transforming static representation into animated changes across multiple dimensions to depict the fluid ecosystem.

Using Generative AI and Creative Computing

The Six Seasons composition comprises seven musical pieces: Seasons 1 through 6 based on environmental sonic changes, plus a Coda that embeds Dr. Liang's narrative about a beluga whale separated from its group, wandering the ocean in search of reunion.

For Seasons 1-6, the generative AI implementation followed a systematic process:

- Model Fine-tuning: Cheng first fine-tuned six separate LoRAs for Stable Diffusion, each trained on authentic images representing a specific Arctic season.[^5] This created six unique visual "dialects" that could generate images in the style of each season's icescape.

- Hybrid Image Generation: These LoRAs were then combined with a model fine-tuned on traditional Chinese landscape paintings to generate six composite paintings that maintained the structure of Shan-shui while incorporating the textures of Arctic icescapes.

- Resolution Enhancement: The generated images were upscaled to 8K resolution using Topaz Photo AI,[^7] creating foundational visual layers that are subtly embedded in the overall experience and intentionally not apparent at first glance.
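For readers unfamiliar with how several LoRAs can be blended on one model, the arithmetic behind the idea can be sketched in plain Python: each LoRA contributes a low-rank update `B @ A` to a base weight matrix, scaled by a blend weight. This is only a toy illustration under stated assumptions. The 2x2 matrices, the blend scales, and the names `matmul` and `merge_loras` are hypothetical, and treating the Shan-shui model as a second adapter is a simplification; the text does not describe the project's actual training or merging code.

```python
# Toy illustration of blending LoRA updates: W' = W + sum_i(scale_i * B_i @ A_i).
# All names and numbers are hypothetical; real LoRAs touch many layers of a
# diffusion model and are normally applied through a library, not by hand.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def merge_loras(base, loras):
    """Return base + sum(scale * B @ A) over (B, A, scale) triples."""
    merged = [row[:] for row in base]  # copy so the base weights stay untouched
    for b_mat, a_mat, scale in loras:
        delta = matmul(b_mat, a_mat)   # low-rank update with the shape of base
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += scale * value
    return merged

# A 2x2 "layer" and two rank-1 adapters, standing in for a seasonal adapter
# and a Shan-shui style adapter (assumed roles, for illustration only).
base_weight = [[1.0, 0.0], [0.0, 1.0]]
season_lora = ([[1.0], [0.0]], [[0.0, 2.0]], 0.5)     # B (2x1), A (1x2), scale
shanshui_lora = ([[0.0], [1.0]], [[4.0, 0.0]], 0.25)  # B (2x1), A (1x2), scale

merged_weight = merge_loras(base_weight, [season_lora, shanshui_lora])
print(merged_weight)
```

Raising or lowering a scale shifts the balance between the two influences, which is one plausible way to move between images where the Arctic texture dominates and images where the Shan-shui structure does.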

The audio-reactive system, designed in TouchDesigner,[^8] layered these generated landscape paintings with satellite imagery collections from each season. Cheng analyzed each season's musical composition by isolating "mid," "high," and "snare" signals alongside linear timestamps to drive specific visual effect parameters, including ice formations, ocean-land transformations, and particle systems.
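The mapping from audio bands and timestamps to visual parameters can be sketched outside TouchDesigner in plain Python. The example below is a minimal sketch, not the project's patch: the band edges, the frame size, the sample rate, and the `particle_rate` mapping are all assumptions, and the "snare" signal is left out because it would need onset detection rather than a simple band measurement.

```python
import math

# Assumed band edges in Hz; the project's actual analysis settings are not given.
BANDS = {"mid": (500.0, 2000.0), "high": (2000.0, 4000.0)}

def band_energy(frame, sample_rate, low_hz, high_hz):
    """Sum the DFT power of `frame` over bins with frequency in [low_hz, high_hz)."""
    n = len(frame)
    energy = 0.0
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        if low_hz <= freq < high_hz:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
            im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
            energy += (re * re + im * im) / (n * n)
    return energy

def control_values(frame, sample_rate):
    """Return each band's share of the total banded energy, between 0 and 1."""
    energies = {name: band_energy(frame, sample_rate, lo, hi)
                for name, (lo, hi) in BANDS.items()}
    total = sum(energies.values())
    if total == 0.0:
        return {name: 0.0 for name in energies}
    return {name: e / total for name, e in energies.items()}

def timeline_progress(seconds, duration):
    """Linear position in the piece, clamped to 0..1."""
    return min(1.0, max(0.0, seconds / duration))

# Demo: one 64-sample frame of a 3 kHz tone sampled at 8 kHz.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 3000.0 * t / SAMPLE_RATE) for t in range(64)]
controls = control_values(tone, SAMPLE_RATE)

# Hypothetical mapping: the "high" share scales a particle emission rate.
particle_rate = 100.0 + 900.0 * controls["high"]
print(controls, particle_rate, timeline_progress(30.0, 120.0))
```

Normalizing each band to a share of the total keeps the control values in a fixed 0 to 1 range, so the same mapping behaves consistently across quiet and loud passages.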

This process involved close collaboration with Dr. Liang and Dr. Jones. Dr. Liang suggested creating a rhythmic cadence in the visual experience that reflected the natural pace of environmental changes, avoiding the rapid visual blooms that often accompany reactive audio-visual work. Dr. Jones contributed oceanographic expertise, recommending the integration of color and brightness changes based on seasonal time series of sun angles and daylight patterns.[^1]
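Both suggestions can be illustrated with a short Python sketch. It uses a textbook cosine approximation of solar declination to estimate the noon sun elevation, then limits how fast a visual parameter may change per frame. The latitude (about 72.7° N, roughly 160 km north of Utqiagvik), the brightness floor, and the function names are assumptions made for illustration; the project's actual time series and smoothing are not described in the text.

```python
import math

LATITUDE_DEG = 72.7  # assumed: roughly 160 km north of Utqiagvik (about 71.3 N)

def solar_declination_deg(day_of_year):
    """Approximate solar declination in degrees (simple cosine model)."""
    return -23.44 * math.cos(2 * math.pi * (day_of_year + 10) / 365.0)

def noon_elevation_deg(day_of_year, latitude_deg=LATITUDE_DEG):
    """Sun elevation at local solar noon; negative means it never rises that day."""
    return 90.0 - abs(latitude_deg - solar_declination_deg(day_of_year))

def brightness(day_of_year, floor=0.15):
    """Map noon elevation to a 0..1 brightness, with a dim floor for polar night."""
    elevation = max(0.0, noon_elevation_deg(day_of_year))
    peak = 90.0 - abs(LATITUDE_DEG - 23.44)  # highest noon elevation of the year
    return floor + (1.0 - floor) * elevation / peak

def slew_limit(current, target, max_step):
    """Move `current` toward `target` by at most `max_step` per frame."""
    delta = max(-max_step, min(max_step, target - current))
    return current + delta

# Demo: brightness near the winter and summer solstices, then a slow fade
# from the winter value toward the summer value over 30 frames.
winter, summer = brightness(355), brightness(172)
level = winter
for _ in range(30):
    level = slew_limit(level, summer, 0.01)
print(winter, summer, level)
```

The slew limiter is one simple way to keep visuals from jumping with every audio peak: even if the target changes abruptly, the displayed value moves at a bounded rate.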

Each season features distinct audio-visual design elements based on thematic exploration. The revelation of Chinese landscape painting aesthetics was achieved through creative coding in TouchDesigner, establishing spiritual connections between Arctic landscapes and Shan-shui traditions.

For the Coda, which represents an imagined space without specific image data, Cheng and Dr. Liang decided to emphasize "Shan-shui" aesthetics more prominently, revealing the conceptual connections embedded throughout the previous seasons. Cheng merged all six seasonal LoRAs and implemented real-time generative AI using StreamDiffusion-TD in TouchDesigner.[^9] This system generates icescapes guided by Simplex noise inputs (simulating the topography) that react to musical elements. The final composition transitions between different aesthetic states, concluding with blurred, spectral imagery of the wandering whale that connects directly to the narrative arc of the music.
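The idea of a noise field that stands in for topography and reacts to music can be sketched in plain Python. Note the substitution: to stay short and dependency-free, this sketch uses layered value noise rather than Simplex noise, and it stops at producing a heightmap. How such a field is fed into StreamDiffusion-TD is not shown, and the function names, scale factors, and the way `audio_level` shifts the field are assumptions for illustration.

```python
import math

def _hash01(ix, iy, seed):
    """Deterministic pseudo-random value in [0, 1) for an integer lattice point."""
    h = (ix * 374761393 + iy * 668265263 + seed * 144665) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    return ((h ^ (h >> 16)) & 0xFFFFFFFF) / 4294967296.0

def _smooth(t):
    """Smoothstep easing so lattice cells blend without visible creases."""
    return t * t * (3.0 - 2.0 * t)

def value_noise(x, y, seed=0):
    """Bilinearly interpolated lattice noise (a stand-in for Simplex noise)."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = _smooth(x - ix), _smooth(y - iy)
    top = _hash01(ix, iy, seed) * (1 - fx) + _hash01(ix + 1, iy, seed) * fx
    bottom = _hash01(ix, iy + 1, seed) * (1 - fx) + _hash01(ix + 1, iy + 1, seed) * fx
    return top * (1 - fy) + bottom * fy

def terrain(width, height, audio_level, time_s, octaves=3):
    """Heightmap in [0, 1]; audio_level (0..1) shifts the field and adds detail."""
    scale = 0.15
    drift = time_s * 0.05 + audio_level * 0.5
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            total, amp, freq, norm = 0.0, 1.0, 1.0, 0.0
            for _ in range(octaves):
                total += amp * value_noise((i * scale + drift) * freq, j * scale * freq)
                norm += amp
                amp *= 0.5 * (0.5 + audio_level)  # louder input keeps more fine detail
                freq *= 2.0
            row.append(total / norm)
        rows.append(row)
    return rows

quiet = terrain(8, 8, audio_level=0.0, time_s=0.0)
loud = terrain(8, 8, audio_level=1.0, time_s=0.0)
print(quiet[0][:3], loud[0][:3])
```

Because the field is deterministic for a given time and audio level, the same passage of music reproduces the same terrain, while changes in level reshape it smoothly.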

Reflection

Six Seasons reimagines environmental storytelling by merging generative AI, Chinese Shan-shui art, and Arctic science to create an immersive encounter with Earth’s most inaccessible regions. Like astronauts sharing Earth’s first orbital portrait, we stand alongside bowhead whales and shifting ice through hydrophone data and painterly imagination. Audiences witness the Arctic transformed through Shan-shui principles: satellite imagery reshaped into mist-clad peaks, beluga migrations rendered as calligraphic gestures.

Here, scientists supply ecological data, while artists shape and curate generative AI outputs within scientific and cultural constraints. This interdisciplinary “echolocation” rejects passive documentation: ice sings, landscapes breathe, and migrations unfold across inhuman timescales. Crucially, AI serves as a bridge, not a replacement, for artistic interpretation—constrained by satellite data and brushwork traditions to avoid aesthetic dilution. Six Seasons becomes both a gentle and urgent love letter to Earth, confronting us with the fragility and tenacity of polar ecosystems.

Footnotes:

[^1]: Nunavut Planning Commission. 2021 Draft Nunavut Land Use Plan, July 2021, Figure 4: Generalized Annual Cycles of Snow, Ice, Water, and Light, p. 12. https://www.nunavut.ca/sites/default/files/21-001e-2021-07-08-2021_draft_nunavut_land_use_plan-english.pdf.

[^2]: QI Audio-Visual and Communications Teams. “Listening to the Arctic: A Composer, Musician, and Oceanographer Reflect on ‘Six Seasons’ (with Video),” May 29, 2024. https://qi.ucsd.edu/listening-to-the-arctic-a-composer-musician-and-oceanographer-reflect-on-six-seasons-with-video/

[^3]: Kairos Music. An interview featuring Dr. Lei Liang, oceanographer Dr. Joshua Jones, and performer Marco Fusi on their musical exploration is available alongside the album here: https://kairos-music.com/products/recording/0022054kai. Due to copyright restrictions, the booklet can only be viewed after logging in through this link.

[^4]: Mingyong Cheng. “Fusion: Landscape and Beyond.” https://www.mingyongcheng.com/projects/fusion-landscape-and-beyond. In collaboration with Xuexi Dang, Xinwen Zhao, Zetao Yu.

[^5]: LoRA for Stable Diffusion is a lightweight fine-tuning method that streamlines adapting large models like Stable Diffusion to custom datasets. See “Using LoRA with Diffusers,” Hugging Face. https://huggingface.co/blog/lora and the original research paper: https://arxiv.org/abs/2106.09685.

[^6]: DreamBooth: Fine-Tuning Text-to-Image Diffusion Models for Subject-Driven Generation. https://arxiv.org/abs/2208.12242. For combined workflows with LoRA: https://github.com/AUTOMATIC1111/stable-diffusion-webui.

[^7]: Tools for image enhancement include Stable Diffusion WebUI’s native enhancer (https://github.com/AUTOMATIC1111/stable-diffusion-webui), Topaz Photo AI (https://www.topazlabs.com/topaz-photo-ai), and Adobe Photoshop Neural Filters (https://www.adobe.com/products/photoshop/neural-filter.html).

[^8]: TouchDesigner, a visual development platform for real-time media: https://derivative.ca/

[^9]: StreamDiffusion-TD by dotsimulate (https://www.patreon.com/c/dotsimulate/home) is a TouchDesigner plugin based on StreamDiffusion (https://arxiv.org/abs/2312.12491) for generating real-time images.

The full collection is available in the Vimeo showcase: https://vimeo.com/showcase/11366903

Credit:

This project is led by Composer and Artistic Director Lei Liang, with advice from Oceanographer and Principal Scientific Advisor Joshua Jones. Marco Fusi performs the violin and viola d’amore. Charles Deluga designed the audio systems and developed software, and Zachary Seldess handled audio software development. New media artist Mingyong Cheng responds to the "Six Seasons" soundscape by reimagining the Arctic through creative computing and generative AI, merging the serene motifs of traditional Chinese paintings with Arctic landscapes.

Publication & Performance:

This series is accessible via a QR code in the booklet of Lei Liang's Six Seasons album, published by KAIROS.

Performance at Experimental Theater, UC San Diego.

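Season 4 is included on the DVD accompanying the book 走向新山水:梁雷音乐文论与作品评析 (roughly, "Toward a New Shan-shui: Lei Liang's Writings on Music and Analyses of His Works").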